This technology won’t be contained.

This is Atlantic Intelligence, an eight-week series in which The Atlantic’s leading thinkers on AI will help you understand the complexity and opportunities of this groundbreaking technology.

Earlier this week, I asked ChatGPT how to clean a humidifier. Then, annoyed by its answer, I asked it to design a lower-maintenance humidifier. It did. But when I prompted the AI to estimate the cost of such a device (a few hundred dollars on the high end), I decided to live with the 30-minute white-vinegar soak it had suggested in the first place. The whole experience was quick, easy, and had the pleasant tickle of ingenuity: I felt like I’d participated in a creative process, rather than just looking something up.

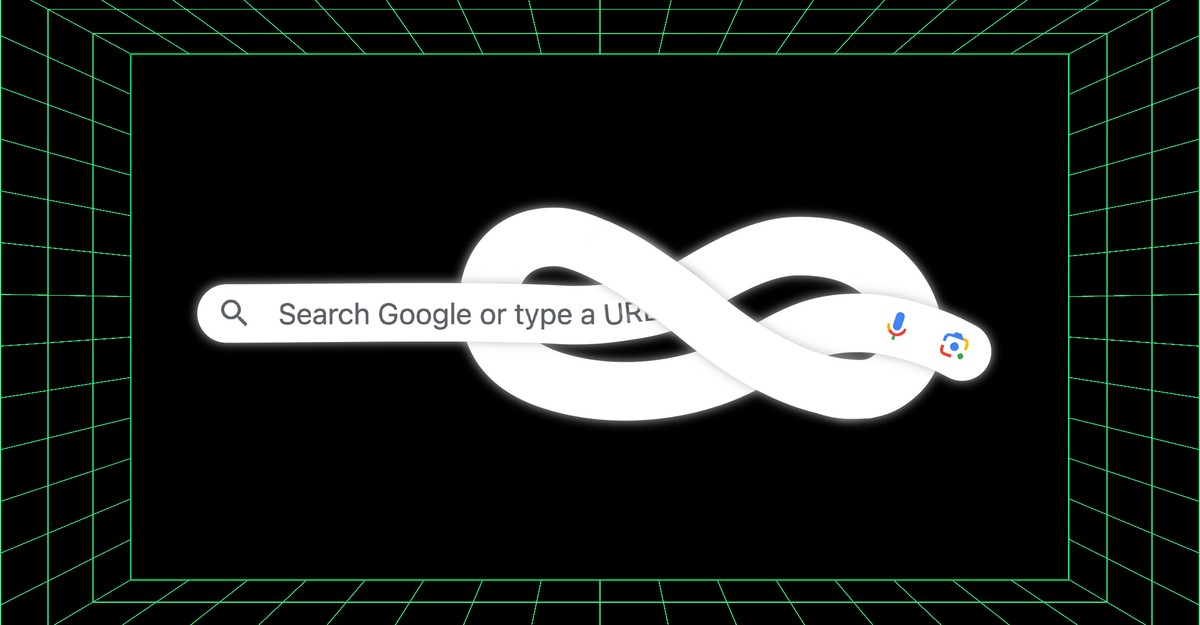

The problem, though, is that I never feel like I can trust a chatbot’s output. Chatbots are prone to making things up and garbling facts. These flaws are bad enough in the context of a stand-alone website like ChatGPT, but generative AI is worming its way throughout the internet. In a new article, my colleague Caroline Mimbs Nyce writes about how content written by generative AI is tripping up Google Search, leading to nonsensical answers for some basic queries. This is evidence that the technology won’t be contained: that it may alter the world in surprising ways, and not always for the better.

— Damon

AI Search Is Turning Into the Problem Everyone Worried About

By Caroline Mimbs Nyce

There is no easy way to explain the sum of Google’s knowledge. It’s ever-expanding. Endless. A growing web of hundreds of billions of websites, more data than even 100,000 of the most expensive iPhones mashed together could possibly store. But right now, I can say this: Google is confused about whether there’s an African country beginning with the letter K.

I’ve asked the search engine to name it. “What’s an African country beginning with K?” In response, the site has produced a “featured snippet” answer, one of those chunks of text that you can read directly on the results page without navigating to another website. It begins like so: “While there are 54 recognized countries in Africa, none of them begin with the letter ‘K.’”

This is wrong. The text continues: “The closest is Kenya, which starts with a ‘K’ sound, but is actually spelled with a ‘K’ sound. It’s always interesting to learn new trivia facts like this.”

Given how nonsensical this response is, you might not be surprised to hear that the snippet was originally written by ChatGPT. But you may be surprised by how it became a featured answer on the internet’s preeminent knowledge base.

What to Read Next

Google’s recent troubles are a sign that a moment anticipated by some experts has arrived: Media created by generative AI is filling the internet to such an extent that once-reliable tools are beginning to break down. For now, the consequences are minor, but in the three stories included below, we explore the much bigger challenges that may come next.

- Prepare for the textpocalypse: The generative-AI era may be an era of endless, supercharged spam, Matthew Kirschenbaum writes.

- Conspiracy theories have a new best friend: Generative-AI programs like ChatGPT threaten to revolutionize how disinformation spreads online, Matteo Wong writes.

- We haven’t seen the worst of fake news: So-called deepfakes, in which an AI program is used to place one person’s face over another’s to create deceptive media, have been a problem for years. Now the technology is both more accessible and more powerful, Matteo notes.

P.S.

I’ll leave you on a lighter note: Burritos might be just the thing to stop the robot apocalypse, according to a recent story by Jacob Sweet. 🌯

— Damon